Building the World’s First Parallella Beowulf Cluster

At last! This week we received the second batch of Epiphany FMC daughtercards, to be combined with the Zynq boards that had been waiting here for quite some time (a picture of them was posted in a previous Kickstarter update). Now that we had eight virgin Parallella prototype machines, we thought it was a great opportunity to build a Parallella cluster. This serves two purposes: first, it gives some meaning to the otherwise boring activity of testing the systems before we ship them to our early adopters; and, better yet, it proves that clustering the boards and harnessing the power of 144 CPU cores (two ARM cores plus 16 Epiphany cores on each of the eight boards) is indeed feasible!

After some thought, we decided to build a Beowulf cluster, using MPI (Message Passing Interface) for inter-board communication. The definition of a Beowulf cluster is somewhat loose, but it is basically a cluster of similar, if not identical, consumer-grade computers connected over a network and using MPI to exchange information. One of the computers is configured as the Master and the rest are Slaves (see the references for further reading). Two popular free MPI implementations are OpenMPI and MPICH2. Before going to a full eight-node Beowulf cluster, however, we wanted to start on a smaller scale, using the two prototype boards on my desk.

Our Parallella prototypes run Ubuntu 11.10. First, we had to find an MPI package for ARM. Luckily, the repositories do contain the required packages, so I installed the OpenMPI package. When everything seemed to be in place, I used mpicc to build a basic MPI “Hello-World” program, then ran the first Parallella MPI program with mpirun and… nothing! The program hangs after pressing [Enter], and it has to be killed from a second terminal, as it won’t respond to [Ctrl-C]. What a turn-off…

OK, if OpenMPI does not work, maybe MPICH2 will? I removed the OpenMPI package and installed the MPICH2 package, but unfortunately the result was the same: nothing comes out after [Enter].

Now, as Gary Oldman said in The Fifth Element: “If you want something done, do it yourself. Yep!” Well, not quite: we were not going to implement MPI ourselves, but rather build and install it from the source package. I downloaded the latest release from www.open-mpi.org and configured the build with mostly default options:

```
$ ./configure --prefix=$HOME/work/open-mpi/install \
      --enable-mpirun-prefix-by-default \
      --enable-static
```

and built the library with `make all`. It took two attempts (and about an hour) to complete the build; once it finished, I ran `sudo make install` to copy the programs and libraries to their destination directory.

The last step was to update .bashrc with the paths to the MPI programs and libraries. [On a side note: at this time, the eSDK that we released for the Parallella computer requires sudo privileges whenever the Epiphany device is used. This will change when we find the time to implement a proper device driver, but for now this is what it is. Because the host program runs under sudo, the environment of the invoking shell is not passed on to it (a security measure in Ubuntu), so one needs to set $PATH and $LD_LIBRARY_PATH explicitly on the command line that invokes the host program. Updating .bashrc is therefore not very useful for now, as these definitions do not carry over to the program.]

mpirun starts the processes on the remote nodes over SSH, so to avoid typing a password each time a program runs on the cluster, we set up password-less, key-based SSH access between the machines. We followed the instructions in the tutorial listed in the references, and were finally ready for the test:

```
$ mpirun -np 2 --hostfile ~/.mpi-hostfile ./hello.e
Task 1 is waiting
Task 0: Sent message 103 to task 1 with tag 1
Task 1: Received 1 char(s) (103) from task 0 with tag 1
```

Voilà! We had a working Parallella MPI environment. After some online research we learned that there was no functional MPI release for Ubuntu 11.10 on ARM, which explains why the repository packages did not work. It should, however, work on 12.04.

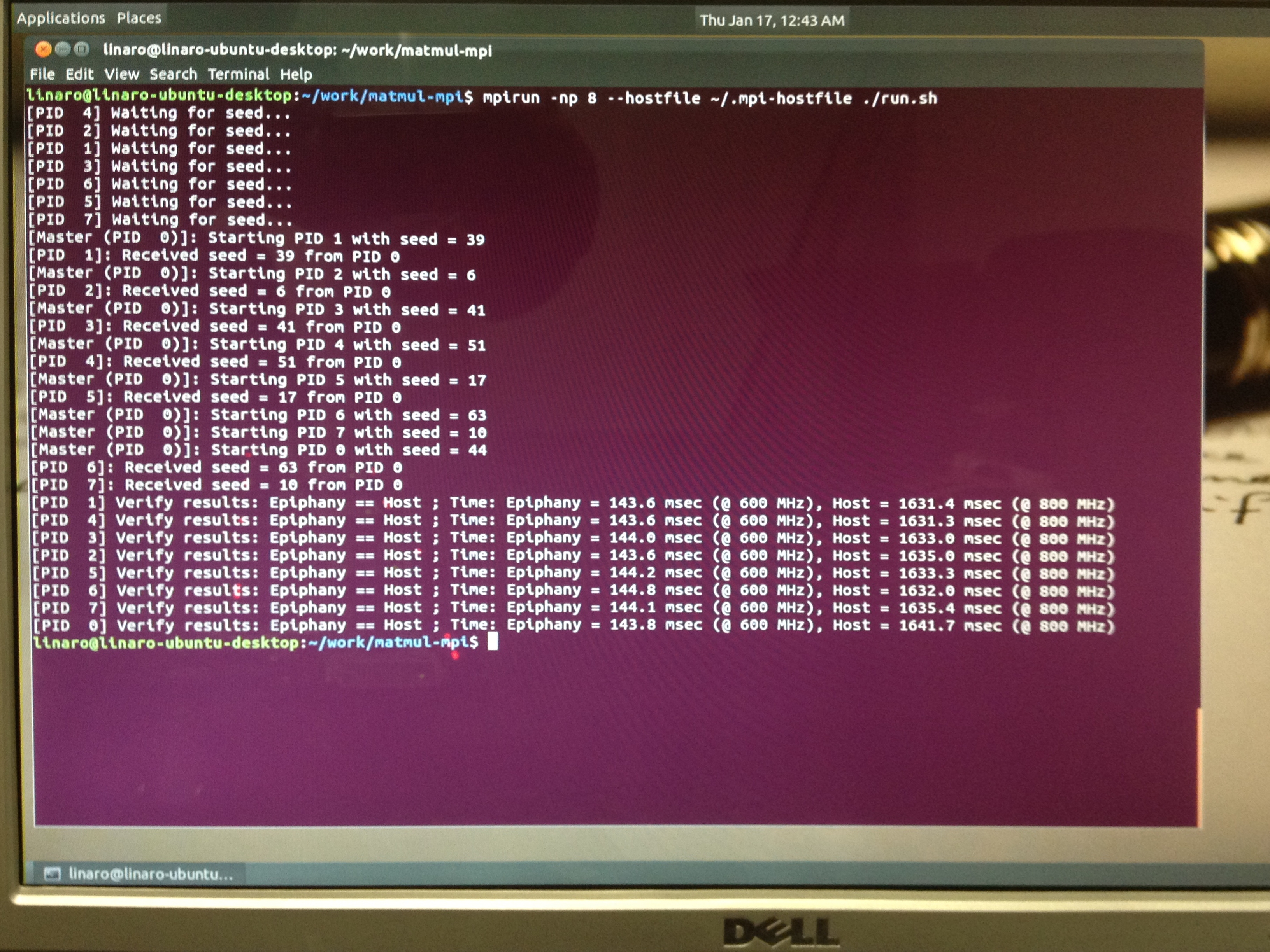

The next task was to bring the Epiphany device into the loop. We decided to modify the matmul-16 example program from the eSDK to run in an MPI environment. To do that, I wrapped the matmul host program with a modified version of the Hello-World program. The Master node generates eight random seeds, keeps one for itself and sends the other seven to the Slave nodes, so that each node in the cluster generates different operand matrices. Each node then computes the product of its operand matrices on the Epiphany and, as a reference, on the host. The two results are compared for correctness and the appropriate messages are printed.

The final step was to deploy the eSDK, the MPI installation and the matmul-mpi program to the eight-node cluster, which we had connected in advance. Using a little shell scripting, we copied the eSDK and MPI installation directories to the clean machines, along with the matmul-mpi binaries, so that all eight machines were identical. This is what the cluster looks like:

Trying to run the program on a single cluster machine resulted in an unexpected error: the loader complained that the libibverbs.so.1 shared library was missing! Why did it run on the test machine but not on the cluster? Ah! The earlier installations of the OpenMPI and MPICH2 packages on the test machine had pulled in the ibverbs package as a dependency. A quick apt-get install on the cluster machines solved this problem too.

Setting up password-less SSH access from the Master to the seven Slaves ensured that we did not need to type passwords every time we ran the program. The final result was just awesome: eight 512×512 matrix multiplications performed in parallel, one on the Master and one on each of the seven Slaves. Here’s a screen capture:

This sums up the effort of building the world’s first Parallella Beowulf cluster. We demonstrated how a high-performance cluster can be constructed from discrete Parallella computers and a couple of network switches. Along the way we also tested the hardware before shipping it to its new owners. The next day, we packed up the boards and went to the nearby post office to ship these boxes:

End note: there is a forum dedicated to MPI on the Parallella Community site. A few days ago we got the great news that David Richie of Brown Deer Technologies, who provides us with the OpenCL environment, has demonstrated a working MPI application on the Epiphany device. This is a great achievement, and maybe sometime in the future it will be possible to extend the Parallella MPI cluster so that MPI also runs across the Epiphany cores on each board?

References for further reading:

http://en.wikipedia.org/wiki/Message_Passing_Interface

http://en.wikipedia.org/wiki/Beowulf_%28computing%29

http://techtinkering.com/2009/12/02/setting-up-a-beowulf-cluster-using-open-mpi-on-linux

http://ibiblio.org/pub/Linux/docs/HOWTO/archive/Beowulf-HOWTO.html

http://www.tldp.org/HOWTO/Beowulf-HOWTO