Face Detection using the Epiphany Multicore Processor

Introduction:

High-quality face detection is a non-trivial application. Fortunately, extensive high-quality open source C/C++ code for image processing is available through the OpenCV library. OpenCV (Open Source Computer Vision Library) is a library of programming functions aimed at real-time computer vision and is freely available under an open source BSD license (http://www.opencv.org).

The OpenCV 2.x library supports two kinds of face detection algorithms: the Haar approach [Viola2001] and the Local Binary Pattern (LBP) approach [Liao2007]. The Haar method provides higher quality results but has substantially higher processing requirements and a larger program footprint.

A major challenge in working with OpenCV is the large footprint of the library. The library is written in C++ and is not well suited to the Epiphany architecture, which has only 32KB of local memory per core. The good news is that most image processing applications can rely on OpenCV for functionality such as camera interfaces and non-critical image processing, and need custom software for only a small portion of the application (the 10/90 rule). In our face detection example, we used OpenCV for the high-level application functions and completely rewrote the inner-loop, LBP-based tile processing kernel in ANSI C, bypassing the OpenCV framework.

Code:

Project source code:(face_detect.zip)

Algorithm Development:

The LBP face detection algorithm consists of the following basic stages:

1. Generating a scaled image pyramid: the detector itself can detect only fixed-size objects. To detect larger objects, the image is scaled down multiple times to form an image pyramid.

2. Preparing an integral image for each pyramid level, scanning the image, and calling the detector to analyze small image fragments (24×24 pixels in our case).

3. Analyzing each image fragment using the classifier. The classifier data tells the classification code how to accept or reject candidate objects.

4. Grouping detections: an object is usually detected at several nearby positions and scales, so positive detections typically look like clouds of rectangles that must be grouped to be useful. In contrast, false-positive detections are usually isolated and should be removed at the grouping step.
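The integral image in stage 2 is what makes the rectangular pixel sums used by LBP features cheap: once the table is built, any rectangle sum costs four lookups. The following is a minimal sketch in C, not taken from the project source; the names `integral_image` and `rect_sum` and the padded table layout (one extra zero row and column) are assumptions made for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Build a summed-area table for a w x h 8-bit image.
 * The table has (w + 1) * (h + 1) entries; the first row and column
 * are zero, so entry (x, y) holds the sum of all pixels strictly above
 * and to the left of (x, y). This layout is an illustrative assumption. */
void integral_image(const uint8_t *img, int w, int h, uint32_t *sum)
{
    assert(img != NULL && sum != NULL && w > 0 && h > 0);
    const int stride = w + 1;

    for (int x = 0; x <= w; ++x)
        sum[x] = 0;                       /* zero top row */

    for (int y = 1; y <= h; ++y) {
        uint32_t row = 0;                 /* running sum of the current row */
        sum[y * stride] = 0;              /* zero left column */
        for (int x = 1; x <= w; ++x) {
            row += img[(y - 1) * w + (x - 1)];
            sum[y * stride + x] = sum[(y - 1) * stride + x] + row;
        }
    }
}

/* Sum of the rw x rh rectangle whose top-left pixel is (x, y),
 * computed from four table lookups. */
uint32_t rect_sum(const uint32_t *sum, int w, int x, int y, int rw, int rh)
{
    const int stride = w + 1;
    return sum[(y + rh) * stride + (x + rw)]
         - sum[y * stride + (x + rw)]
         - sum[(y + rh) * stride + x]
         + sum[y * stride + x];
}
```

With this layout, a 24×24 fragment needs a 25×25 table of 32-bit sums (2500 bytes), which fits comfortably alongside code and classifier data in a 32KB local memory; the actual kernel may use a different representation.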

Face detection involves many intricate details that are beyond the scope of this short white paper. The complete reference source code is included at the end of this article. Anyone interested in learning more can explore the source code or refer to one of the many references available on the subject.

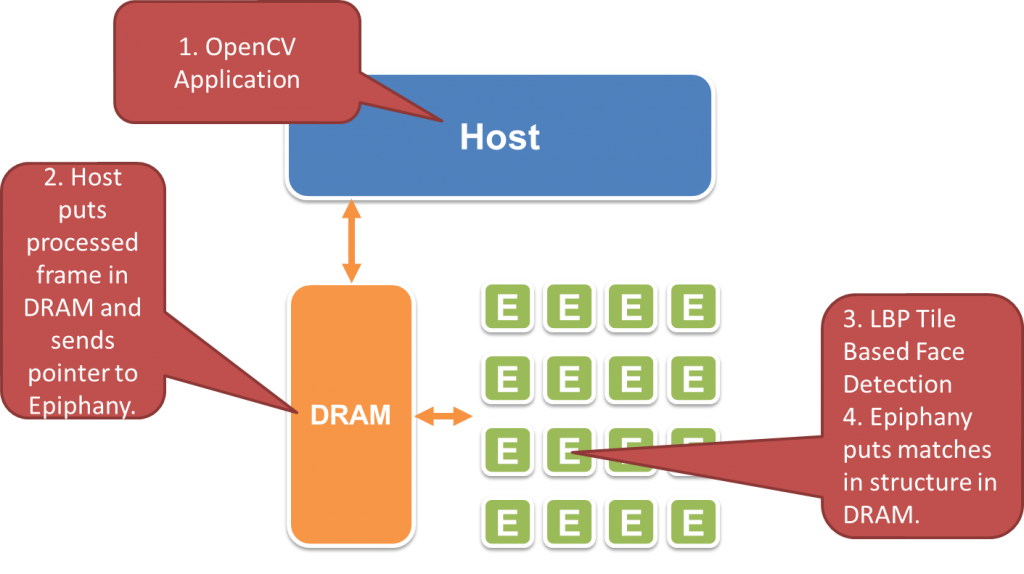

Accelerator Model

The following section describes the simple task queue model used to accelerate the face detection application.

Host algorithm:

1. Send classifier data to shared memory.

2. Prepare image pyramid (a few images with gradually reducing size).

3. Send pyramid to shared memory.

4. Prepare the task queue and send it to shared memory. Each task consists of the coordinates of an image tile that a core should process. Tiles overlap slightly so that potential detections close to the tile borders are not missed.

5. Send the «start» signal to shared memory and wait until all tasks have been processed.

6. Read and process results from shared memory.
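Step 4 of the host algorithm can be sketched as follows. This is an illustration only, not the project's code: the `tile_task` layout, the function name `make_tile_tasks`, and the tile and overlap parameters are hypothetical. The sketch assumes that an overlap of one pixel less than the detector window (23 pixels for a 24×24 window) is enough for every window position to fall entirely inside at least one tile.

```c
#include <assert.h>

/* Hypothetical task record: the rectangle of one image tile. */
typedef struct { int x, y, w, h; } tile_task;

/* Cover an img_w x img_h image with tiles of at most tile x tile pixels,
 * where neighbouring tiles share `overlap` pixels. Writes at most
 * max_tasks entries and returns the number of tiles required. */
int make_tile_tasks(int img_w, int img_h, int tile, int overlap,
                    tile_task *tasks, int max_tasks)
{
    assert(img_w > 0 && img_h > 0 && overlap >= 0 && tile > overlap);
    const int step = tile - overlap;      /* distance between tile origins */
    int n = 0;

    for (int y = 0; ; y += step) {
        /* Clip the last row of tiles to the image height. */
        int h = (y + tile < img_h) ? tile : img_h - y;
        for (int x = 0; ; x += step) {
            /* Clip the last column of tiles to the image width. */
            int w = (x + tile < img_w) ? tile : img_w - x;
            if (n < max_tasks)
                tasks[n] = (tile_task){ x, y, w, h };
            ++n;
            if (x + tile >= img_w)
                break;                    /* this tile reached the right edge */
        }
        if (y + tile >= img_h)
            break;                        /* this row reached the bottom edge */
    }
    return n;
}
```

The host would run this once per pyramid level and append the resulting records to the queue in shared memory. A return value larger than `max_tasks` signals that the caller's buffer was too small.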

Epiphany Accelerator Algorithm (per core):

1. Wait for the «start» signal in shared memory.

2. Transfer classifier data from shared memory to core memory.

3. Get the next unprocessed task from the task queue (this step is protected by a mutex). If the queue is empty, go to step 1.

4. For each task, complete the following steps:

a) Transfer the image tile required for processing from shared memory to core memory.

b) Perform object detection on the tile data using the available classifier (a cascade using LBP features).

c) Write the results back to shared memory in place of the task.

5. Go to step 3.
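The mutex-protected dequeue in step 3 is the only point where cores contend with each other. The sketch below shows one way it could look in portable C11; it is not the project's implementation. The `task_queue` layout and function names are hypothetical, and the `atomic_flag` spin lock is a stand-in for whatever mutex primitive the Epiphany platform provides.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>

/* Hypothetical queue header placed in shared memory. */
typedef struct {
    atomic_flag lock;   /* stand-in for the mutex guarding the queue */
    int next;           /* index of the first unclaimed task */
    int count;          /* total number of tasks in the queue */
} task_queue;

/* Host side: reset the queue before sending the start signal. */
void task_queue_init(task_queue *q, int count)
{
    assert(q != NULL && count >= 0);
    atomic_flag_clear(&q->lock);
    q->next = 0;
    q->count = count;
}

/* Core side: claim the next task. Returns its index, or -1 when the
 * queue is empty (the core then goes back to waiting for «start»). */
int claim_task(task_queue *q)
{
    assert(q != NULL);

    /* Acquire the lock: spin until the flag was previously clear. */
    while (atomic_flag_test_and_set_explicit(&q->lock, memory_order_acquire))
        ;

    /* Critical section: read and advance the shared index. */
    int idx = (q->next < q->count) ? q->next++ : -1;

    /* Release the lock so other cores can claim tasks. */
    atomic_flag_clear_explicit(&q->lock, memory_order_release);
    return idx;
}
```

Because each core pulls a new task as soon as it finishes the previous one, tiles that take longer to classify do not stall the other cores, which is the usual reason for choosing a shared queue over a static split of tiles per core.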

Results:

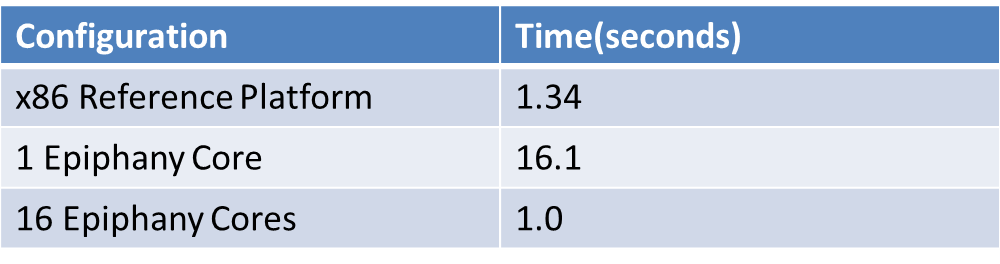

Experiment 1:

In this experiment, only a single core of the Epiphany system was enabled. All experiments used the 1600×1065 pixel image mentioned in the previous report. Time measured at host: 0.038 seconds for pyramid building and 16.46 seconds for waiting. Time measured at core: 16.08 seconds.

Experiment 2:

In this experiment, all 16 cores were enabled. Time measured at host: 0.039 seconds for pyramid building and 1.08 seconds for waiting. Time measured at cores: 1.0±0.01 seconds.

Reference Experiment:

The same face detection code base was also run exclusively on the host processor for comparison. All code (including data transfers) was run by a single core of the host CPU, and only host memory was used. The processor used for the experiment was an x86-based, GHz-class CPU. Time measured at host: 0.032 seconds for pyramid building and 1.336 seconds for waiting. Time measured at «core»: 1.312 seconds.
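As a rough worked check of the scaling, the host wait times reported above can be combined as follows. Here $T_1$ and $T_{16}$ denote the host wait times with 1 and 16 Epiphany cores, and $T_{\text{x86}}$ the host wait time in the reference experiment; these symbols are introduced only for this calculation, and the results are rounded.

$$
S_{16} = \frac{T_1}{T_{16}} = \frac{16.46}{1.08} \approx 15.2,
\qquad
E_{16} = \frac{S_{16}}{16} \approx 0.95,
\qquad
\frac{T_{\text{x86}}}{T_{16}} = \frac{1.336}{1.08} \approx 1.24
$$

In other words, going from 1 to 16 cores gives roughly a 15.2× speedup (about 95% parallel efficiency), and the 16-core run finishes about 1.24× faster than the single x86 core, under the caveat stated in the notes that host-to-shared-memory transfer time is excluded.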

Notes:

The time for data transfers between the host and shared memory was not measured because the evaluation kit used for this demonstration only had a transfer speed of approximately 100KB/s. In the Parallella system, the bandwidth between the host CPU and the Epiphany accelerator will be over 1.4GB/s. All data transfers between shared memory and the Epiphany local memory banks were done using DMA. It is important to note that the demo is not intended to show the peak performance of the Epiphany floating-point processor. The byte-wide processing in this example is not ideal for the Epiphany processor, but on a per-Watt basis the Epiphany processor still beats the x86-based processor by a wide margin.

Conclusions:

- The Epiphany can accelerate a useful application.

- The Epiphany is a “smart” accelerator that can complete large tasks independently of the host.

- The Epiphany supports true task based parallelism, making it a broadly applicable offload engine.

- The Epiphany is fairly easy to use, judging by the modest 7-week development time for this complex example.

- The Epiphany performance scales up nicely from 1 to 16 cores (at least in this example).

- Performance numbers in this application do not reflect the true power of the Epiphany architecture because the software uses 8-bit data and the current chips are optimized for 32-bit integer/floating-point computation.

Acknowledgement:

This work was done in collaboration with CVisionLab.